- Moative

Context Engineering for AI is the Enterprise Version of "Santa is Real"

A POC does not an enterprise make

Stop imagining context as a pile of information you can stuff into a model. Start treating it as a living system with owners, versions, decay, and blast radius.

You don’t have an enterprise AI problem. You have an enterprise agreement problem wearing an AI hoodie.

The proof is how quickly we all fell in love with the phrase “context engineering.” It has the calming scent of a deterministic discipline. Engineering suggests you can put context in a box, label the box, and ship the box to production. The box might be JSON. The box might be a vector database. The box might be a “knowledge graph” that someone swears is not just a graph-shaped PowerPoint.

And then you run the POC and it works, which is the most dangerous part, because it creates the precise kind of confidence that should only be available to teenagers and management consultants.

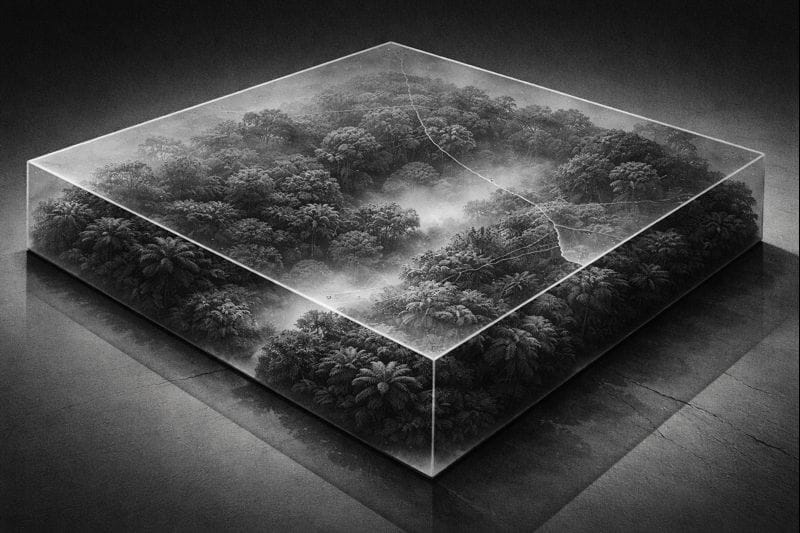

A POC is not the enterprise. A POC is a terrarium. You controlled the temperature. You chose the species. You removed predators. You gave the plant the exact amount of sunlight. Then you declared that you understood nature.

Scaling is the moment you take the lid off and discover the enterprise was a rainforest the whole time.

The rainforest is not just “more data.” It’s more contradiction. More exceptions. More tacit rules. More “it depends.” More shadow processes that exist because the official process is a fairytale told at onboarding. Context doesn’t just live in documents. It lives in the fact that the shipping manager and the controller have been quietly disagreeing about what “completed” means for eight years, and everyone has learned to route around the disagreement like it’s a pothole on the way to the office.

That’s why “context engineering” is a sedative. It implies the core challenge is retrieval. But retrieval is the easy part. The hard part is arbitration: deciding which context wins when contexts disagree.

And contexts always disagree.

Context is siloed because organizations are siloed. Context is unstructured because humans communicate in stories and threats and jokes and half-sentences. Context is tribal because enterprises reward speed and survival, not documentation. Context is temporal because policy is a moving target and “the way we do it” becomes “the way we used to do it” without anyone updating the rulebook.

If this sounds familiar, it should, because we’ve been here before. We just called it ERP.

ERP was the original “single source of truth” fantasy. It promised unity. What it delivered in most places was a beautifully normalized database that faithfully reflected the company’s communication failures. The system didn’t unify meaning; it froze whatever meanings each function already had and made them official. Finance had its truth, Sales had its truth, Ops had its truth, and everyone learned the art of reconciliation as a lifestyle.

Conway’s Law wasn’t a clever quote for ERP. It was the instruction manual. Organizations build systems that mirror their communication structures. Not their strategy. Not their values. Their actual communication structure: who trusts whom, who talks to whom, where decisions get made, where escalations go, where exceptions are resolved, and where they are buried.

So ERP didn’t eliminate silos. It laminated them.

Now swap ERP for AI and the outcome gets worse in a very specific way. ERP mostly recorded decisions after the fact. AI recommends decisions in real time, with confidence, in prose, which means it doesn’t just encode your silos; it accelerates them. When a system of record is wrong, you reconcile later. When a system of recommendation is wrong, you operationalize the wrong thing faster, with fewer people noticing, because the output sounds like it knows what it’s doing.

This is why enterprise AI rollouts collapse right after the celebratory POC email. The POC worked because it lived in a single function, on a single dataset, with a single champion, under a single definition of success. It was scoped so the enterprise’s contradictions were “out of scope.” A lot of things are “out of scope” right up until they become your entire scope.

The moment you try to go enterprise-wide, you don’t just encounter data gaps. You encounter meaning fractures. Sales and Finance disagree on what a customer is. Legal and Ops disagree on what “approved” means. Compliance and Product disagree on what “risk” means. Everyone agrees on the words, which is the most sinister part, because it creates the illusion of alignment. It’s like two people saying “I love you” while one is talking about romance and the other is talking about snacks.

This is where the modern “context engineering” story usually gets trapped in the shallow end. Teams talk about pipelines and embeddings and chunking strategies as if the core risk is that the model won’t find the right paragraph in a policy document. The core risk is that the policy document is not the policy. The policy is the behavior. The policy is the pattern of overrides. The policy is the CFO’s eyebrow raise when someone suggests recognizing revenue that way. The policy is “ask Priya,” which is a perfectly valid governance mechanism except it doesn’t scale and it definitely doesn’t index.

“All that has to be said has been said. We repeat because people don’t remember.”

That line belongs in every enterprise AI deck, right next to Conway’s Law, because companies keep relearning the same lesson with new tools. Data lakes didn’t unify meaning. Dashboards didn’t unify meaning. ERP didn’t unify meaning. AI will not unify meaning. It will only force the meaning problem into the open, because now the system is speaking back at you, confidently, at scale.

So what does “good” look like if perfect context engineering doesn’t exist?

First, admit the uncomfortable truth: nobody actually knows what perfect looks like, because “perfect context” would require an enterprise where definitions never conflict, policies never change, exceptions never happen, and tribal knowledge is fully documented. That’s not a company. That’s a lab experiment.

The goal isn’t perfection. The goal is operability: building an AI rollout that stays useful as the enterprise drifts.

That requires a shift in mental model. Stop imagining context as a pile of information you can stuff into a model. Start treating context as a living system with owners, versions, decay, and blast radius.

In ERP-land, the mature move wasn’t “single source of truth.” It was auditability: you could trace what happened, when, and why, even when the truth was messy. Enterprise AI needs the equivalent: traceability of answers and traceability of decisions. Not because auditors are watching (they are), but because humans need to know whether to trust what they’re seeing.

Traceability in AI means the assistant doesn’t just answer; it shows what it relied on and what it assumed. It means the assistant behaves like a careful controller, not like a charismatic intern. It means you can ask, “What did you base that on?” and get something better than vibes.
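One way to picture a traceable answer is as a record that carries its own evidence. The sketch below is a minimal illustration, not a prescribed design: `Source`, `TracedAnswer`, and `basis` are hypothetical names, and the policy identifier and excerpt are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Source:
    doc_id: str    # hypothetical identifier for a policy or record
    version: str   # which version of that document was read
    excerpt: str   # the passage the answer leaned on


@dataclass
class TracedAnswer:
    text: str
    sources: list = field(default_factory=list)      # Source objects relied on
    assumptions: list = field(default_factory=list)  # plain-text assumptions

    def basis(self) -> str:
        """Respond to "What did you base that on?" in plain text."""
        if not self.sources and not self.assumptions:
            return "No recorded basis. Treat this answer as unverified."
        lines = [
            f"Relied on {s.doc_id} ({s.version}): {s.excerpt}"
            for s in self.sources
        ]
        lines += [f"Assumed: {a}" for a in self.assumptions]
        return "\n".join(lines)


# Illustrative data only; no real policy is being quoted here.
answer = TracedAnswer(
    text="Recognize the revenue on delivery.",
    sources=[Source("revenue-policy", "v3", "Revenue is recognized on delivery.")],
    assumptions=["'Completed' means delivered, not invoiced."],
)
print(answer.basis())
```

The point of the shape is that an answer with no sources and no stated assumptions is visibly different from one that has them, so "vibes" show up as an empty basis rather than hiding behind fluent prose.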

Then there’s versioning. Enterprises treat policies like they’re timeless until suddenly they aren’t. If your AI assistant doesn’t understand “what was true when,” it will give you answers that are technically correct and practically wrong, which is my favorite category. This is where the ERP mindset helps: treat definitions and policies like code. Version them. Tie answers to versions. Make the “as-of date” a first-class concept, not an afterthought.
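A date-aware lookup of this kind can be sketched in a few lines. This is a minimal illustration under stated assumptions: `PolicyVersion` and `PolicyHistory` are hypothetical names, the dates and policy text are invented, and a version is assumed to stay in effect until the next one starts.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyVersion:
    effective_from: date
    version: str
    text: str


class PolicyHistory:
    """Versions of one policy, queryable by the date in question."""

    def __init__(self, versions):
        self._versions = sorted(versions, key=lambda v: v.effective_from)
        self._starts = [v.effective_from for v in self._versions]

    def as_of(self, when: date) -> PolicyVersion:
        # Find the latest version whose start date is on or before `when`.
        i = bisect_right(self._starts, when)
        if i == 0:
            raise LookupError(f"no policy version in effect on {when}")
        return self._versions[i - 1]


# Illustrative history; the policy wording is invented for the example.
history = PolicyHistory([
    PolicyVersion(date(2022, 1, 1), "v1", "Completed means shipped."),
    PolicyVersion(date(2024, 7, 1), "v2", "Completed means delivered."),
])
old = history.as_of(date(2023, 3, 15))
print(old.version, "-", old.text)
```

Raising an error for dates before the first version is a deliberate choice in this sketch: an assistant that silently falls back to the current policy is exactly how "technically correct and practically wrong" answers get made.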

Next comes escalation, the part everyone wants to skip because it sounds slow. In real enterprises, slow is what keeps you alive. The assistant needs to know when it has wandered into judgment territory – where policy conflicts, exceptions dominate, outcomes are sensitive – and route the decision to the right humans with the relevant context already packaged. This is not a failure. This is the system doing the most intelligent thing it can do: admitting that the enterprise itself hasn’t resolved the conflict, and therefore the model shouldn’t pretend it has.
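The escalation rule described above can be sketched as a small triage step that runs before the assistant answers. Everything here is an assumption made for illustration: the `Request` flags, the `Routing` shape, the `triage` function, and the owner name are hypothetical, and a real system would need a far richer signal for "judgment territory" than three booleans.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Request:
    question: str
    conflicting_policies: list = field(default_factory=list)  # ids of policies that disagree
    is_exception: bool = False   # falls outside the documented process
    is_sensitive: bool = False   # the outcome is sensitive


@dataclass
class Routing:
    action: str                  # "answer" or "escalate"
    route_to: Optional[str]      # the human owner, when escalating
    reasons: list                # why the assistant stepped back
    context: dict                # what the human needs, already packaged


def triage(req: Request, owner: str) -> Routing:
    reasons = []
    if req.conflicting_policies:
        reasons.append("policies conflict: " + ", ".join(req.conflicting_policies))
    if req.is_exception:
        reasons.append("request is an exception to the documented process")
    if req.is_sensitive:
        reasons.append("outcome is sensitive")
    if not reasons:
        return Routing("answer", None, [], {})
    context = {"question": req.question, "policies": list(req.conflicting_policies)}
    return Routing("escalate", owner, reasons, context)


routine = triage(Request("What is the travel per diem?"), owner="finance-controller")
contested = triage(
    Request("Can we mark this order completed?",
            conflicting_policies=["ops-sop", "finance-close"]),
    owner="finance-controller",
)
print(routine.action, contested.action, contested.route_to)
```

Note that the escalation path returns the reasons and the packaged context along with the owner, so the human starts from the conflict itself rather than from a blank ticket.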

Finally, accountability. Enterprise-wide AI without clear ownership is just a blame diffuser with autocomplete. Every meaningful recommendation implicitly shifts power: who gets to decide, who gets to override, who gets to be wrong. If you don’t design for that explicitly, Conway will do it for you, and you won’t like his design. The assistant will become “owned” by whoever shouts the loudest or whichever team got budget first, and the rest of the enterprise will quietly treat it as someone else’s toy.
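Designing for ownership explicitly can start as something as plain as a registry the assistant must consult before it recommends anything. The sketch below assumes hypothetical names throughout (`Ownership`, `OwnershipRegistry`, the domain and role strings) and is meant only to show the idea of refusing to act where nobody has been named.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ownership:
    decides: str      # who makes the call
    overrides: str    # who may overrule the assistant
    accountable: str  # who answers for a wrong recommendation


class OwnershipRegistry:
    def __init__(self):
        self._owners = {}

    def assign(self, domain: str, ownership: Ownership) -> None:
        self._owners[domain] = ownership

    def require(self, domain: str) -> Ownership:
        # No named owner means no recommendation, rather than a default owner.
        try:
            return self._owners[domain]
        except KeyError:
            raise LookupError(
                f"no owner assigned for '{domain}'; refusing to recommend"
            ) from None


registry = OwnershipRegistry()
registry.assign(
    "revenue-recognition",
    Ownership(decides="controller", overrides="cfo", accountable="finance"),
)
print(registry.require("revenue-recognition").decides)
```

Failing loudly on an unowned domain is the design choice that matters here: it turns "whoever shouts the loudest" into a visible gap that someone has to fill on purpose.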

Notice what all of this has in common: it’s not primarily about data. It’s about how the organization works and communicates. Which is exactly why ERP is the right parallel. ERP projects succeeded when companies treated them as operating model change with software attached. They failed when companies treated them as software installation with organizational change postponed indefinitely.

Enterprise AI will follow the same pattern, but with a new twist: it will look like it’s working right up until it has quietly taught the organization to trust the wrong thing.

So the real enterprise AI playbook is not “graduate POCs to production.” It’s “graduate local successes into shared meaning.” It’s identifying the fracture points where definitions conflict and judgment lives, and treating those as the core implementation surface, not as edge cases.

If that feels heavier than another POC, good. That heaviness is reality. The enterprise is heavy. The only reason POCs feel light is that they’re not carrying the enterprise yet.

The punchline is simple and a little rude: you can’t out-model a misaligned organization. You can only expose it faster.

And if you design your rollout like ERP taught you – around traceability, versioning, escalation, and ownership – AI stops being a magic trick and starts becoming what it should have been all along: a system that helps the enterprise say what it actually means, even when it doesn’t want to.

Enjoy your Sunday!
